1642 – Mechanical Adder

One of the first mechanical adding machines was designed by Blaise Pascal. It used a system of gears and wheels similar to those found in odometers and other counting devices. Pascal's adder, known as the Pascaline, could both add and subtract and was invented to calculate taxes.

1694 – Gottfried Wilhelm von Leibniz

Gottfried Wilhelm von Leibniz produced a machine similar to the Pascaline that was more accurate and could perform all four basic arithmetic operations (addition, subtraction, multiplication, and division). Leibniz also formalized the binary number system used by all modern computers. The binary number system represents every number using just two digits, 0 and 1.
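To make binary concrete, here is a minimal Python sketch that writes a whole number using only 0 and 1 by repeatedly dividing by 2. The function name `to_binary` is made up for this illustration; Python's built-in `bin` does the same job.

```python
def to_binary(n: int) -> str:
    """Return the binary digits of a non-negative whole number as a string."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next lowest binary digit
        n //= 2                    # move on to the next power of two
    return "".join(reversed(digits))


print(to_binary(13))  # 1101, because 13 = 8 + 4 + 0 + 1
```

Each position in the result stands for a power of two, just as each position in a decimal number stands for a power of ten.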

1801 – Joseph Marie Jacquard

Storing data was the next challenge to be met. One of the first uses of stored data was in a weaving loom invented by Joseph Marie Jacquard, which used stiff cards punched with holes to position threads. A collection of these cards encoded a program that directed the loom. This allowed a process to be repeated with a consistent result every time.

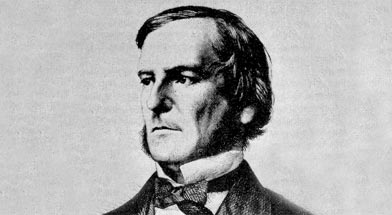

1847 – George Boole

Logic is a method of reasoning toward conclusions that are either true or false. George Boole created a way of representing this using Boolean operators (AND, OR, NOT), with results expressed as true or false, yes or no, or in binary as 1 or 0. Web searches still use these operators today.
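As a small illustration, the Python sketch below builds a truth table for the three basic Boolean operators AND, OR, and NOT, writing true as 1 and false as 0. The function name `truth_table` and the column order are choices made for this example only.

```python
from itertools import product


def truth_table():
    """Return rows of (a, b, a AND b, a OR b, NOT a) using 1 for true and 0 for false."""
    rows = []
    for a, b in product([False, True], repeat=2):
        rows.append((int(a), int(b), int(a and b), int(a or b), int(not a)))
    return rows


print("a b AND OR NOT(a)")
for row in truth_table():
    print(*row)
```

AND is 1 only when both inputs are 1, OR is 1 when at least one input is 1, and NOT flips its single input.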

1890 – Herman Hollerith

Herman Hollerith created the first combined system of mechanical calculation and punch cards to rapidly calculate statistics gathered from millions of people.

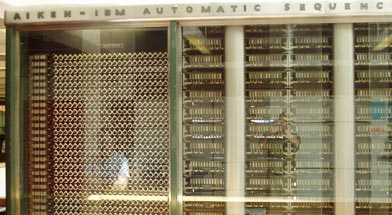

1945 – Mark I

The Mark I, designed by Howard Aiken and built at IBM, was one of the first computers to combine electrical and mechanical parts. The Mark I could store 72 numbers, and it could perform a multiplication in 6 seconds and a division in 16. While nowhere near as fast as current computers, this was still faster than most humans.

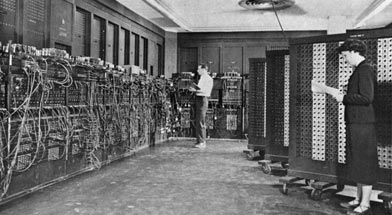

1946 – ENIAC

The first fully electronic computer was built by John Mauchly and J. Presper Eckert and named ENIAC, short for Electronic Numerical Integrator and Computer. ENIAC was a thousand times faster than the Mark I (1945). This computer weighed about 30,000 kilograms and took up a wall 3 meters high and 24 meters across. These days computers fit in our pockets!

1952 – Checker Program

Arthur Samuel was an IBM scientist who used the game of checkers to create the first learning program. His program became a better player after many games against itself and a variety of human players in a 'supervised learning mode'. The program observed which moves were winning strategies and adapted its programming to incorporate those strategies.

1957 – Perceptron

Frank Rosenblatt designed the perceptron, which is a type of neural network. A neural network is loosely modelled on your brain, which contains billions of cells called neurons that are connected together in a network. The perceptron connects a web of points where simple decisions are made, and these decisions come together in the larger program to solve more complex problems.
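The idea of a single decision point can be sketched in a few lines of Python. This is a simplified, single-neuron illustration rather than Rosenblatt's original design: the function names, the whole-number weights, the learning rate of 1, and the choice of the AND function as training data are all assumptions made for the example.

```python
def predict(weights, bias, inputs):
    """Make one simple decision: output 1 if the weighted sum is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(samples, epochs=20, learning_rate=1):
    """Nudge the weights toward the correct answer each time a prediction is wrong."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or 1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training data for the AND function: the output is 1 only when both inputs are 1.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_SAMPLES)
print([predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

A single perceptron like this one can only separate examples with a straight line, which is why larger networks combine many such decision points.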

1967 – Pattern Recognition

The first programs able to recognize patterns were based on a type of algorithm called nearest neighbour. An algorithm is a sequence of instructions and steps. When the program is given a new object, it compares the object with data from the training set and assigns it the class of its nearest neighbour, the most similar object in memory.
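The comparison step can be sketched in Python as follows. The sketch assumes that objects are described by pairs of numbers and that "most similar" means the smallest straight-line (Euclidean) distance; the function name, the training data, and the labels are invented for illustration.

```python
import math


def nearest_neighbour(training_set, new_point):
    """Return the label of the training example closest to new_point."""
    _, closest_label = min(
        training_set,
        key=lambda example: math.dist(example[0], new_point),  # Euclidean distance
    )
    return closest_label


# Hypothetical training set: (features, label) pairs.
TRAINING_SET = [
    ((1.0, 1.0), "small"),
    ((1.5, 2.0), "small"),
    ((8.0, 9.0), "large"),
    ((9.0, 8.5), "large"),
]

print(nearest_neighbour(TRAINING_SET, (2.0, 1.5)))  # small
print(nearest_neighbour(TRAINING_SET, (7.5, 8.0)))  # large
```

Other measures of similarity would work equally well here; the choice of distance depends on the kind of data being compared.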

1981 – Explanation-Based Learning

Gerald DeJong introduced explanation-based learning (EBL) in a journal article published in 1981. In EBL, prior knowledge of the world is provided by training examples, which makes this a type of supervised learning. The program analyzes the training data and discards irrelevant information to form a general rule to follow. For example, in chess, if the program is told that it needs to focus on the queen, it will discard all pieces that don't have an immediate effect on her.

1990s – Machine Learning Applications

In the 1990s we began to apply machine learning in data mining, adaptive software and web applications, text learning, and language learning. Advances continued in machine learning algorithms within the general areas of supervised learning and unsupervised learning. Reinforcement learning algorithms were also developed.

2000s – Adaptive Programming

The new millennium brought an explosion of adaptive programming. Anywhere adaptive programs are needed, machine learning is there. These programs are capable of recognizing patterns, learning from experience, abstracting new information from data, and optimizing the efficiency and accuracy of their processing and output.